Open Lecture on Statistics

Statistical notes for clinical researchers: simple linear regression 1 – basic concepts

Hae-Young Kim

Restor Dent Endod 2018;43(2):e21.

DOI: https://doi.org/10.5395/rde.2018.43.e21

Published online: April 12, 2018

Department of Health Policy and Management, College of Health Science, and Department of Public Health Science, Graduate School, Korea University, Seoul, Korea.

- Correspondence to Hae-Young Kim, DDS, PhD. Professor, Department of Health Policy and Management, Korea University College of Health Science, and Department of Public Health Science, Korea University Graduate School, 145 Anam-ro, Seongbuk-gu, Seoul 02841, Korea. kimhaey@korea.ac.kr

Copyright © 2018. The Korean Academy of Conservative Dentistry

This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License (https://creativecommons.org/licenses/by-nc/4.0/) which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

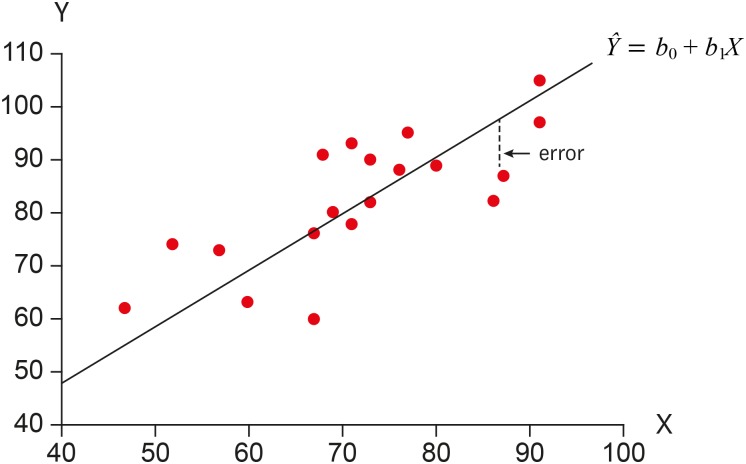

REGRESSION LINE AND REGRESSION MODEL

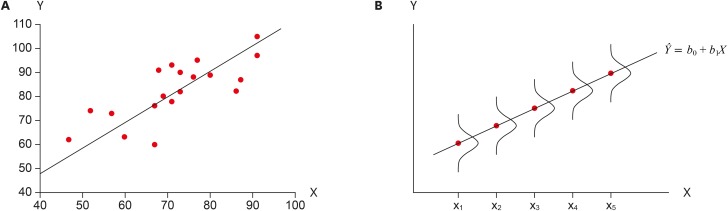

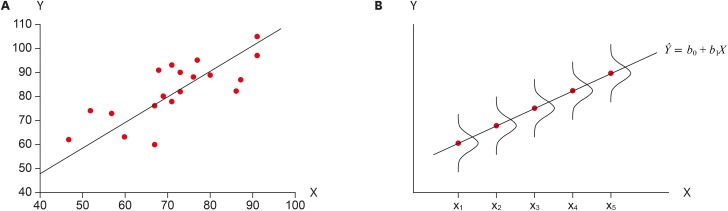

Linear: The means of Y in the X subgroups lie on a straight line, which represents the linear relationship between X and Y. In Figure 1b, the points on the line are the subgroup means, and connecting them yields a straight line.

Independent: The observations are independent of each other, a common assumption in classical statistical models.

Normal: Within each X subgroup, the Y values follow a normal distribution. Under this assumption, the mean and variance alone fully describe the distribution of a subgroup.

Equal variance: All subgroup variances are equal. Under this assumption, a single common variance estimate can be obtained instead of calculating each subgroup variance separately.
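The four assumptions are commonly condensed into a single model statement. The symbols below (β₀ for the intercept, β₁ for the slope, εᵢ for the error term, σ² for the common variance) are standard textbook notation assumed here for illustration; they are not taken from the text above.

$$
Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i, \qquad \varepsilon_i \overset{\text{iid}}{\sim} N(0, \sigma^2)
$$

Read this way, the straight line β₀ + β₁X gives the subgroup means (linear), "iid" covers independence, N(·) covers normality, and the single σ² shared by every X subgroup covers equal variance.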

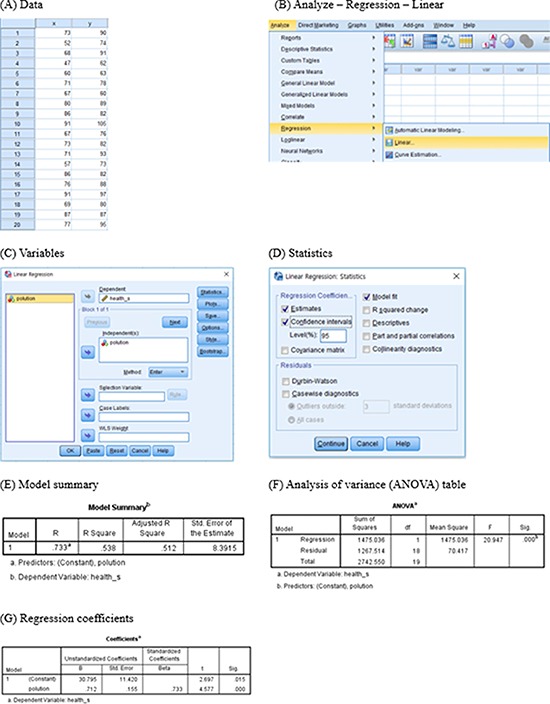

LEAST SQUARES METHOD

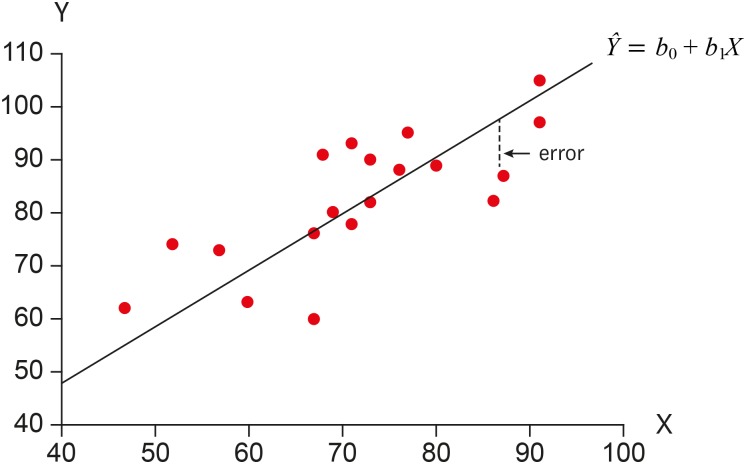

Calculation of least squares estimators of slope and intercept in simple linear regression.

REFERENCES

- 1. Kim HY. Statistical notes for clinical researchers: covariance and correlation. Restor Dent Endod 2018;43:e4.

- 2. Galton F. Regression towards mediocrity in hereditary stature. J Anthropol Inst G B Irel 1886;15:246-263.

- 3. Daniel WW. Biostatistics: basic concepts and methodology for health science. 9th ed. New York (NY): John Wiley & Sons; 2010. p. 410-440.

Calculation of least squares estimators of slope and intercept in simple linear regression.

| No | X | Y | X − X̄ | Y − Ȳ | (X − X̄)² | (X − X̄)(Y − Ȳ) |
|---|---|---|---|---|---|---|
| 1 | 73 | 90 | 0.55 | 7.65 | 0.30 | 4.21 |
| 2 | 52 | 74 | −20.45 | −8.35 | 418.20 | 170.76 |
| 3 | 68 | 91 | −4.45 | 8.65 | 19.80 | −38.49 |
| 4 | 47 | 62 | −25.45 | −20.35 | 647.70 | 517.91 |
| 5 | 60 | 63 | −12.45 | −19.35 | 155.00 | 240.91 |
| 6 | 71 | 78 | −1.45 | −4.35 | 2.10 | 6.31 |
| 7 | 67 | 60 | −5.45 | −22.35 | 29.70 | 121.81 |
| 8 | 80 | 89 | 7.55 | 6.65 | 57.00 | 50.21 |
| 9 | 86 | 82 | 13.55 | −0.35 | 183.60 | −4.74 |
| 10 | 91 | 105 | 18.55 | 22.65 | 344.10 | 420.16 |
| 11 | 67 | 76 | −5.45 | −6.35 | 29.70 | 34.61 |
| 12 | 73 | 82 | 0.55 | −0.35 | 0.30 | −0.19 |
| 13 | 71 | 93 | −1.45 | 10.65 | 2.10 | −15.44 |
| 14 | 57 | 73 | −15.45 | −9.35 | 238.70 | 144.46 |
| 15 | 86 | 82 | 13.55 | −0.35 | 183.60 | −4.74 |
| 16 | 76 | 88 | 3.55 | 5.65 | 12.60 | 20.06 |
| 17 | 91 | 97 | 18.55 | 14.65 | 344.10 | 271.76 |
| 18 | 69 | 80 | −3.45 | −2.35 | 11.90 | 8.11 |
| 19 | 87 | 87 | 14.55 | 4.65 | 211.70 | 67.66 |
| 20 | 77 | 95 | 4.55 | 12.65 | 20.70 | 57.56 |
| | X̄ = 72.45 | Ȳ = 82.35 | | | ∑ = 2,912.95 | ∑ = 2,072.85 |
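The column sums in the table can be turned into the slope and intercept with the usual least squares formulas, b1 = ∑(X − X̄)(Y − Ȳ) / ∑(X − X̄)² and b0 = Ȳ − b1·X̄. The following is a minimal sketch in plain Python using the 20 data pairs from the table; the function name `least_squares` and the variable names are illustrative choices, not part of the original article.

```python
# Data copied from the table above (20 paired observations).
X = [73, 52, 68, 47, 60, 71, 67, 80, 86, 91,
     67, 73, 71, 57, 86, 76, 91, 69, 87, 77]
Y = [90, 74, 91, 62, 63, 78, 60, 89, 82, 105,
     76, 82, 93, 73, 82, 88, 97, 80, 87, 95]


def least_squares(x, y):
    """Return (b0, b1, sxx, sxy) for a simple linear regression of y on x.

    sxx is the sum of squared deviations of x from its mean;
    sxy is the sum of cross-products of the x and y deviations.
    """
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx             # slope
    b0 = y_bar - b1 * x_bar    # intercept
    return b0, b1, sxx, sxy


b0, b1, sxx, sxy = least_squares(X, Y)
print(f"Sxx = {sxx:.2f}, Sxy = {sxy:.2f}")
print(f"slope b1 = {b1:.4f}, intercept b0 = {b0:.2f}")
```

With these data, the sums reproduce the table totals (2,912.95 and 2,072.85), giving a slope of about 0.71 and an intercept of about 30.79.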
