Statistical notes for clinical researchers: Sample size calculation 1. comparison of two independent sample means


Asking for advice about sample size calculation is one of the most frequent requests clinical researchers make to statisticians. Sample size calculation is essential both for obtaining the results the researcher expects and for interpreting the statistical results sensibly. Insignificant results from studies with too small a sample size may be suspected of being false negatives, and significant results from studies with too large a sample size may be suspected of being false positives. In this article, the principles of sample size calculation are introduced, and some practical examples are shown using the free software G*Power.1

Why is sample size determination important?

In most clinical studies, the sample size should be determined before the experiment is performed. To draw conclusions from an experiment, we usually interpret the p values of significance tests. A p value is directly linked to the corresponding test statistic, which is calculated using the standard error, a function of the sample size and the standard deviation (SD). Therefore, the result of a significance test depends on the sample size. For example, when comparing two sample means, the standard error of the mean difference can be expressed as the SD multiplied by the square root of 2/n, $SE = SD \times \sqrt{2/n}$, where n is the sample size per group, if equal sample sizes and equal variances are assumed for the two groups. When the sample size is inappropriate, interpretations based on p values may not be reliable. If the sample size is too small, we are apt to obtain a small test statistic, a large p value, and a statistically insignificant result even when the mean difference is substantial. In contrast, a very large sample size may lead to a large test statistic, a small p value, and statistical significance even when the mean difference is trivial. A significance test result may therefore be unreliable when the sample size is too small, and clinically meaningless when it is too large. To make the significance test reliable and clinically meaningful, we need to plan a study with an appropriate sample size. The previous article on effect size in the Statistical Notes for Clinical Researchers series explains this issue in more detail.2
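
As a minimal sketch of this dependence, assuming equal group sizes n and a common SD, the snippet below computes the standard error SD × √(2/n) and the resulting test statistic for a fixed mean difference. The function names and the example numbers (mean difference 5, SD 10) are hypothetical and chosen only for illustration.

```python
import math

def se_diff(sd, n):
    """Standard error of the difference between two means (equal n, equal SD)."""
    return sd * math.sqrt(2 / n)

def z_stat(mean_diff, sd, n):
    """Test statistic = mean difference / standard error."""
    return mean_diff / se_diff(sd, n)

# The same mean difference gives ever larger statistics as n grows.
for n in (5, 20, 80, 320):
    print(n, round(se_diff(10, n), 3), round(z_stat(5, 10, n), 3))
```

Each fourfold increase in n halves the standard error and doubles the test statistic, which is why the same mean difference can be "insignificant" in a small study and "significant" in a large one.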


Information needed for sample size determination

When we compare two independent group means, we need the following information for sample size determination.

1. Information related to effect size

Basically, we need to specify the expected group means and standard deviations. This information can be obtained from similar previous studies or pilot studies. If no previous study is available, we have to make reasonable guesses based on our knowledge. We can then calculate the effect size, Cohen's d, as the mean difference divided by the SD.

If the variances of the two groups differ, the SD is given as $\sqrt{(\sigma_1^2 + \sigma_2^2)/2}$ under the assumption of equal sample sizes. To detect a smaller effect size as statistically significant, a larger sample size is needed, as shown in Table 1.
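
A minimal sketch of the effect size calculation described above, assuming equal group sizes so that the SD for unequal variances is √((σ1² + σ2²)/2). The function names and the example means and SDs are hypothetical.

```python
import math

def pooled_sd(sd1, sd2):
    """SD used for Cohen's d when the group SDs differ (equal group sizes assumed)."""
    return math.sqrt((sd1 ** 2 + sd2 ** 2) / 2)

def cohens_d(mean1, mean2, sd1, sd2):
    """Effect size: absolute mean difference divided by the (pooled) SD."""
    return abs(mean1 - mean2) / pooled_sd(sd1, sd2)

print(cohens_d(60, 50, 10, 10))  # equal SDs
print(cohens_d(60, 50, 8, 12))   # unequal SDs
```

When the two SDs are equal, the pooled SD reduces to that common SD, so the formula agrees with the simple definition of d.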

2. Level of significance and one/two-sided tests

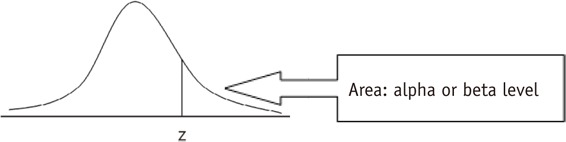

The type I error level (α-error level), or level of significance, needs to be decided. The significance test may be one-sided or two-sided: we apply Zα for a one-sided test and Zα/2 for a two-sided test. For the usual α-error level of 0.05, Zα = Z0.05 = 1.645 for a one-sided test and Zα/2 = Z0.025 = 1.96 for a two-sided test (Table 2).
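
These critical values can be reproduced from the standard normal distribution. The sketch below uses Python's standard-library `statistics.NormalDist`; the helper name `z_alpha` is hypothetical.

```python
from statistics import NormalDist

def z_alpha(alpha, two_sided=True):
    """Critical standard-normal value for a given alpha level."""
    tail = alpha / 2 if two_sided else alpha  # two-sided tests split alpha over both tails
    return NormalDist().inv_cdf(1 - tail)

print(round(z_alpha(0.05, two_sided=False), 3))  # one-sided
print(round(z_alpha(0.05), 3))                   # two-sided
```

A two-sided test at α uses the same critical value as a one-sided test at α/2, which is why G*Power treats a two-sided α of 0.05 and a one-sided α of 0.025 alike.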

3. Power level

Power is the probability of rejecting the null hypothesis when the alternative hypothesis is true. Power equals one minus the type II error (1 − β), where β is the probability of failing to reject (accepting) the null hypothesis when the alternative hypothesis is true. The most frequently used power levels are 0.8 and 0.9, corresponding to Zβ = 0.84 (β = 0.20) and Zβ = 1.28 (β = 0.10) (Table 2).
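
A minimal sketch, assuming the normal approximation and equal group sizes, that recovers Zβ from the desired power and, conversely, approximates the power of a two-sided test for a given n per group. The function names are hypothetical, and the small probability of rejecting in the wrong tail is ignored.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()

def z_beta(power):
    """Standard-normal value corresponding to the desired power (1 - beta)."""
    return STD_NORMAL.inv_cdf(power)

def approx_power(mean_diff, sd, n, alpha=0.05):
    """Normal-approximation power of a two-sided test with n subjects per group."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    se = sd * math.sqrt(2 / n)
    return STD_NORMAL.cdf(abs(mean_diff) / se - z_crit)

print(round(z_beta(0.80), 2), round(z_beta(0.90), 2))
print(round(approx_power(10, 10, 15), 3), round(approx_power(10, 10, 16), 3))
```

With a mean difference of 10 and an SD of 10, 15 subjects per group fall just short of 80% power and 16 just exceed it under this approximation.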

4. Allocation ratio

The allocation ratio of the two groups (N2 / N1) needs to be determined.

5. Drop out

During the experiment, some subjects may drop out for various reasons, so the initial sample size needs to be increased to retain an adequate sample size at the final observation. If 10% of subjects are expected to drop out, the initial sample size should be increased by at least 10%.
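
A minimal sketch of the drop-out adjustment, assuming the drop-out rate is known in advance. Dividing the required size by (1 − rate) guarantees that about the required number remain; simply adding the rate as a percentage falls slightly short. The function name is hypothetical.

```python
import math

def inflate_for_dropout(n_required, dropout_rate):
    """Initial enrolment so that about n_required remain after the expected drop-out."""
    return math.ceil(n_required / (1 - dropout_rate))

print(inflate_for_dropout(16, 0.10))
print(inflate_for_dropout(100, 0.10))
```

For 16 subjects both approaches give 18, but for 100 subjects adding 10% gives 110 (about 99 remaining), whereas dividing by 0.9 gives 112.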

Calculation of sample size

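When we compare two independent group means, we can use the following simple formula, based on the normal approximation, to determine an adequate sample size:

$$N_1 = \frac{r + 1}{r} \cdot \frac{\sigma^2 (Z_{\alpha/2} + Z_\beta)^2}{\Delta^2}, \qquad N_2 = r N_1$$

where Δ is the mean difference (mean 1 − mean 2), σ is the common SD, and r = N2 / N1 is the allocation ratio. Let's assume the following conditions: mean difference = 10, SD (σ) = 10, α-error level (two-sided) = 0.05 (corresponding Zα/2 = 1.96), power level = 0.8 (corresponding Zβ = 0.84), and allocation ratio N2 / N1 = 1. Then N1 = 2 × 10² × (1.96 + 0.84)² / 10² = 15.68, which is rounded up to 16 subjects per group.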
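
The formula can be sketched as a small function, assuming a two-sided α and the normal approximation; the function name and its parameter names are hypothetical. It uses exact normal quantiles (about 1.960 and 0.842), which give 15.70 rather than 15.68 for the conditions above, still rounded up to 16.

```python
import math
from statistics import NormalDist

def sample_size_two_means(mean_diff, sd, alpha=0.05, power=0.8, ratio=1.0):
    """Normal-approximation sample sizes (N1, N2) for two independent means.

    alpha is two-sided; ratio is the allocation ratio N2 / N1.
    """
    z = NormalDist()
    z_a = z.inv_cdf(1 - alpha / 2)
    z_b = z.inv_cdf(power)
    n1 = (ratio + 1) / ratio * sd ** 2 * (z_a + z_b) ** 2 / mean_diff ** 2
    return math.ceil(n1), math.ceil(ratio * n1)  # round each group up

print(sample_size_two_means(10, 10))           # conditions in the text
print(sample_size_two_means(10, 10, ratio=2))  # unequal allocation
```

With an allocation ratio of 2 and an effect size of 1, this approximation gives N1 = 12 and N2 = 24, slightly fewer than the t-based figures G*Power reports for Example 2.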

Sample size calculations can be performed with various statistical software packages. Table 1 shows sample sizes for one-sided tests determined with the free software G*Power for various mean differences, standard deviations, levels of significance, and power levels. The sample size of 17 in Table 1 corresponds to exactly the conditions above; it is slightly larger than 16 because G*Power bases the calculation on the t distribution rather than the normal approximation used in the formula. A larger sample size is needed as the effect size decreases, the level of significance decreases, and the power increases.

Sample size determination procedure using G*Power

G*Power is free software and can be downloaded at http://www.gpower.hhu.de/. An appropriate sample size for comparing two independent sample means can be determined by performing the following steps.

Step 1: Selection of statistical test types:

Menu: Tests-Means-Two independent groups

Step 2: Calculation of effect size:

Menu: Determine - mean & SD for 2 groups - calculate and transfer to main window

Step 3: Select one-sided (tails) or two-sided (tails) test

Step 4: Select the α-error level (a two-sided test at α is equivalent to a one-sided test at α/2)

Step 5: Select power level

Step 6: Select allocation ratio

Step 7: Calculation of sample size

Menu: Calculate

Example 1) Effect size = 2

Two sided (tails) test

Two-sided α - error level=0.05 (one-sided α=0.025)

Power = 0.8

Allocation ratio N2 / N1 = 1.

Appropriate sample size calculated: N1 = 8, N2 = 8.

Example 2) Effect size = 1

One sided (tails) test

α - error level = 0.025

Power = 0.8

Allocation ratio N2 / N1 = 2.

Appropriate sample size calculated: N1 = 13, N2 = 25.